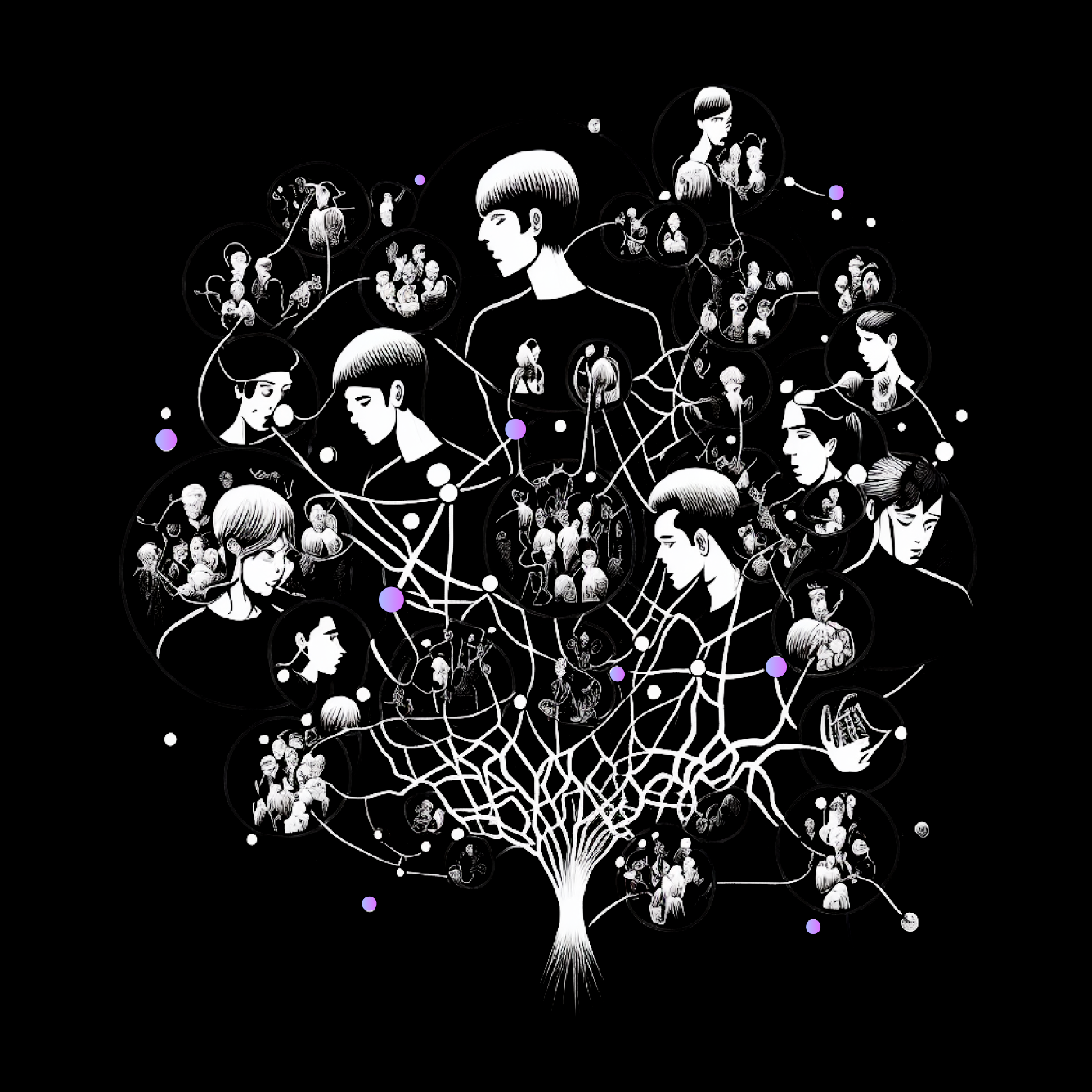

ADAM is a framework for building distributed social spaces that moves beyond the concept of isolated apps. It is both a social network engine and a new distributed web in which applications interoperate seamlessly.

The first social network

Current social networks are monolithic black boxes that make decisions on your behalf. The ADAM Layer instead mirrors the real world, which runs on human networks and languages. No servers, just people making decisions together.

Bring your own technology. The ADAM Layer lets developers interact seamlessly with a range of technologies such as Holochain, blockchains, other decentralized systems, centralized APIs, and more.

Users and app developers can evolve the existing network by creating or extending components. Communities can branch off into their own sub-cultures without losing interoperability with the rest of the network.

Get Started

Learn ADAM through our interactive tutorial tree, which shows how your data changes with each step.

At its core, the ADAM Layer is a meta-ontology that defines three classes: Agents, Languages and Perspectives. Together these form a spanning layer that enables many-to-many mappings between user interfaces (i.e., apps) and existing web technologies wrapped in "Languages". Apps interface with "Perspectives": private, locally stored graph databases that associate data across different Languages.
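To make the relationship between these classes more concrete, here is a minimal TypeScript sketch of a Perspective as a private, in-memory graph of links between data held in different Languages. The names `Perspective`, `Link`, `addLink`, `query` and `languages`, and the `<language>://<address>` addressing scheme, are hypothetical and chosen only for illustration; this is not the ADAM API.

```typescript
// Assumed addressing scheme for this sketch only: "<language>://<address>".
type ExpressionUrl = string;

interface Link {
  source: ExpressionUrl;
  predicate: string;
  target: ExpressionUrl;
}

// A Perspective sketched as a private, in-memory graph of links whose
// endpoints may point at data stored in different Languages.
class Perspective {
  private links: Link[] = [];

  addLink(link: Link): void {
    this.links.push(link);
  }

  // Return every link that matches all fields given in `pattern`.
  query(pattern: Partial<Link>): Link[] {
    return this.links.filter(
      (l) =>
        (pattern.source === undefined || l.source === pattern.source) &&
        (pattern.predicate === undefined || l.predicate === pattern.predicate) &&
        (pattern.target === undefined || l.target === pattern.target),
    );
  }

  // Names of the Languages referenced by the links in this Perspective.
  languages(): Set<string> {
    const names = new Set<string>();
    for (const l of this.links) {
      for (const url of [l.source, l.target]) {
        const i = url.indexOf("://");
        names.add(i === -1 ? url : url.slice(0, i));
      }
    }
    return names;
  }
}

// Usage: associate a chat message with a file held in another Language.
const p = new Perspective();
p.addLink({ source: "chat-lang://msg1", predicate: "attachment", target: "files-lang://doc7" });
p.addLink({ source: "chat-lang://msg1", predicate: "reply", target: "chat-lang://msg2" });
```

Because the graph stores only addresses and relationships, any app that understands the same links can read or extend it, whichever Languages the underlying data lives in.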

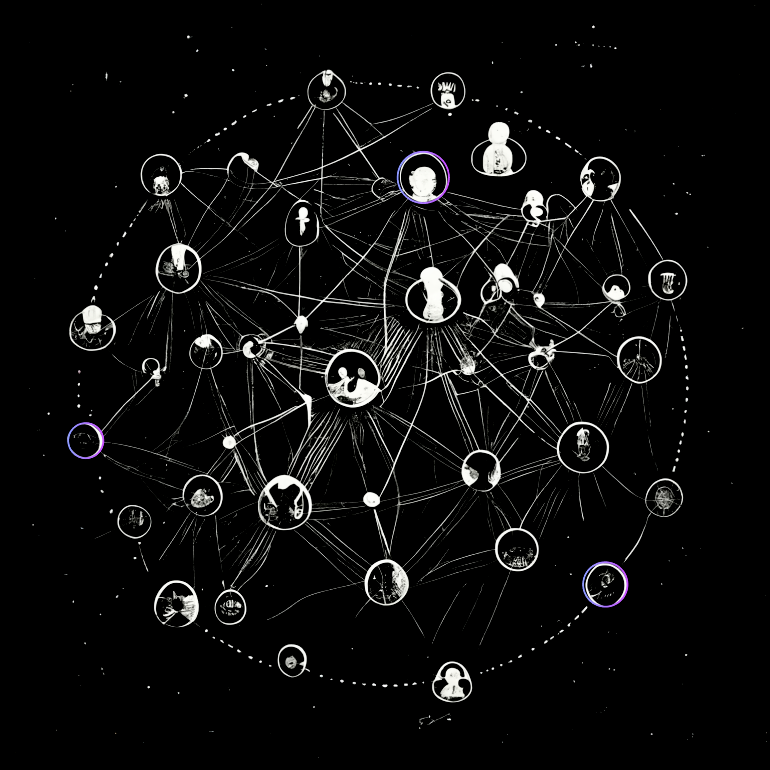

Neighbourhoods

A shared perspective

A private Perspective can be turned into a shared Neighbourhood, where users work together to create common meaning. Neighbourhoods are group collaboration spaces for sharing any kind of data, such as text messages, to-do lists, or calendars.
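One simple way to picture a Neighbourhood is as a group of members who each hold a local copy of a shared Perspective and stay in sync by exchanging diffs of added and removed links. The TypeScript sketch below models that idea in memory under that assumption; `MemberCopy`, `Neighbourhood`, `PerspectiveDiff` and `publish` are hypothetical names, and the in-process broadcast stands in for whatever networking a real Neighbourhood would use.

```typescript
interface Link {
  source: string;
  predicate: string;
  target: string;
}

// Hypothetical diff type: one change to the shared graph.
interface PerspectiveDiff {
  additions: Link[];
  removals: Link[];
}

// Identity key for a link, so duplicates collapse and removals can find it.
const linkKey = (l: Link): string => JSON.stringify([l.source, l.predicate, l.target]);

// One member's local copy of the shared Perspective.
class MemberCopy {
  readonly links = new Map<string, Link>();

  // Additions are applied before removals within a single diff.
  apply(diff: PerspectiveDiff): void {
    for (const l of diff.additions) this.links.set(linkKey(l), l);
    for (const l of diff.removals) this.links.delete(linkKey(l));
  }
}

// In-memory stand-in for the network: every published diff reaches every member.
class Neighbourhood {
  private members: MemberCopy[] = [];

  join(member: MemberCopy): void {
    this.members.push(member);
  }

  publish(diff: PerspectiveDiff): void {
    for (const m of this.members) m.apply(diff);
  }
}

// Usage: Alice publishes a to-do item and Bob's copy receives it too.
const hood = new Neighbourhood();
const alice = new MemberCopy();
const bob = new MemberCopy();
hood.join(alice);
hood.join(bob);
const todo: Link = { source: "todo-lang://list1", predicate: "item", target: "todo-lang://buy-milk" };
hood.publish({ additions: [todo], removals: [] });
```

This toy ignores concurrent edits, message ordering and members who join late, all of which a real synchronisation mechanism would have to handle.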

Social Organisms

A higher level of collaboration

Neighbourhoods can become Social Organisms by incorporating Social DNA: code embedded in the Neighbourhood that identifies and modifies patterns in the semantic graph. Because Social DNA belongs to the group rather than to any single app, it also makes the expectations of the social system explicit. For example: "What are the meaningful interactions in this space?" or "Where should decision-making processes be stored in the shared graph?"
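As an illustration of what a Social DNA rule might express, the sketch below encodes one hypothetical answer to "What are the meaningful interactions in this space?": any message with at least a threshold number of reactions is marked as pinned. It is written as a plain TypeScript function over links; the `reaction` and `pinned` predicates, the `pinPopularMessages` function and the threshold are assumptions, and ADAM's actual Social DNA may be expressed quite differently.

```typescript
interface Link {
  source: string;
  predicate: string;
  target: string;
}

// Hypothetical Social DNA rule: a message with at least `threshold`
// "reaction" links counts as a meaningful interaction and gets pinned.
function pinPopularMessages(links: Link[], threshold: number): Link[] {
  // 1. Identify the pattern: count "reaction" links per source message.
  const counts = new Map<string, number>();
  for (const l of links) {
    if (l.predicate === "reaction") {
      counts.set(l.source, (counts.get(l.source) ?? 0) + 1);
    }
  }

  // 2. Modify the graph: add one "pinned" link per qualifying message,
  //    skipping messages already pinned so the rule is idempotent.
  const alreadyPinned = new Set(
    links.filter((l) => l.predicate === "pinned").map((l) => l.source),
  );
  const added: Link[] = [];
  counts.forEach((n, message) => {
    if (n >= threshold && !alreadyPinned.has(message)) {
      // "true" is a placeholder target chosen for this sketch.
      added.push({ source: message, predicate: "pinned", target: "true" });
    }
  });
  return [...links, ...added];
}

// Usage: m1 has two reactions, m2 has one; with threshold 2 only m1 is pinned.
const graph: Link[] = [
  { source: "chat-lang://m1", predicate: "reaction", target: "chat-lang://r1" },
  { source: "chat-lang://m1", predicate: "reaction", target: "chat-lang://r2" },
  { source: "chat-lang://m2", predicate: "reaction", target: "chat-lang://r3" },
];
const updated = pinPopularMessages(graph, 2);
```

Since the rule operates on the shared graph rather than inside one app, every app connected to the Neighbourhood sees the same pinned messages.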